Our Review System

In light of all these difficult questions, I’ve tried to base the review system on a few guiding principles:

- Humans are better at comparing than scoring

- Comparisons only work between fairly similar things

- Try to be clear about what you’re rating, and why you’re rating it

These ideas inform all the choices that go into rating a rum. We get to a rating using the following rubric:

- Blind Neat Score: 25%

- Blind Mixed Score: 10%

- Transparency Score: 10%

- Presentation Score: 5%

- Personal Review Score: 50%
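The rubric above amounts to a weighted average. Here is a minimal sketch of that arithmetic, assuming every component is scored on the same 0 to 10 scale (only the transparency score is explicitly described as out of 10, so the shared scale is an assumption). The names `WEIGHTS` and `overall_score` are made up for illustration.

```python
# Weights copied from the rubric; they sum to 1.0.
WEIGHTS = {
    "blind_neat": 0.25,
    "blind_mixed": 0.10,
    "transparency": 0.10,
    "presentation": 0.05,
    "personal": 0.50,
}


def overall_score(scores):
    """Return the weighted average of the component scores.

    Assumes every component uses the same scale (0-10 here),
    which is an illustrative assumption rather than a stated rule.
    """
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing component scores: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


# Made-up component scores for a hypothetical rum.
example = {
    "blind_neat": 7.0,
    "blind_mixed": 6.0,
    "transparency": 8.0,
    "presentation": 5.0,
    "personal": 8.0,
}
print(round(overall_score(example), 2))  # 7.4
```

Because the personal review carries half the weight, a one-point change there moves the overall score as much as a two-point change in the blind neat score.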

Below we’ll describe the basic process we use to arrive at each individual score, followed by some scenarios where we deviate from the basic template.

The blind taste test

The first step in any rating is to taste the rum without knowing what it is. The main goal is to avoid having preexisting opinions affect a rating. For example, maybe I’ve heard that “Spanish style” rum is unpopular among rum nerds. If I go in knowing I’m sipping a Spanish-style rum, my knowledge of broad rum trends can affect how I view it. We keep a few key features of our blind taste tests consistent:

- A rum is always tasted alongside at least 2 other rums that are similar in many qualities (input, fermentation, distillation, age and proof).

- A rum is always tasted by at least 2 tasters, whose scores will ultimately be averaged together. Tasters must establish their own ratings before discussing with others.

- A rum is tasted first neat (in a glass with nothing else), and then in a daiquiri. It will receive separate ratings as a neat sipper and as a mixer.

As a result of this process, we end up with a neat score and a mixer score.

I think the choice to always base our “Mixer” score on a daiquiri is probably the most controversial decision in our entire method. On the one hand, a daiquiri is a drink that is very easy to make consistently, and it is simple enough to let the character of the rum shine through. It also gives a very clear indication of how the rum will perform in a variety of citrus-forward drinks; making an old-fashioned wouldn’t really tell you if a rum would make a good Mai Tai.

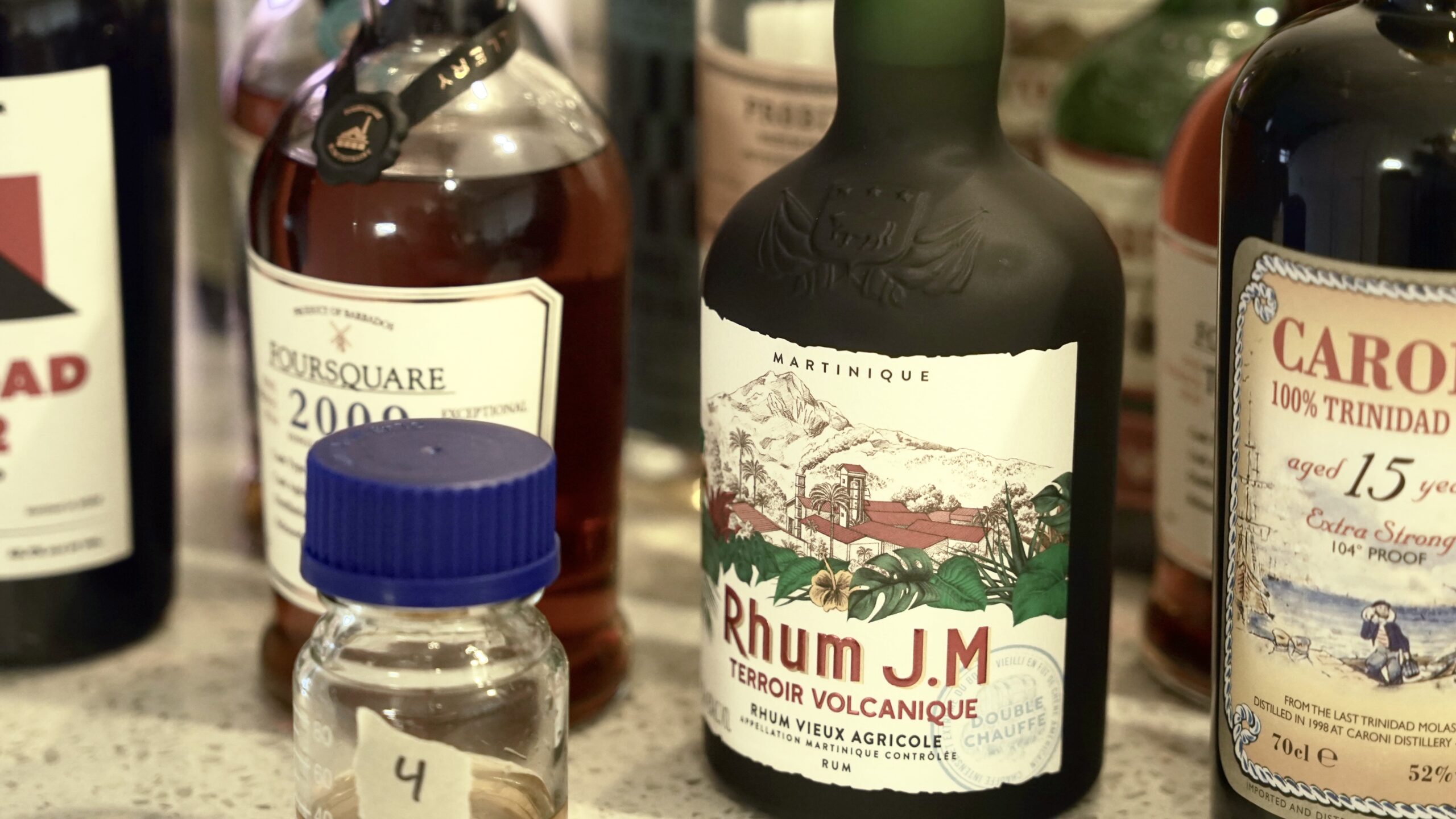

On the other hand, not all rums are daiquiri rums, and that’s totally okay! When tasting Foursquare Exceptional Cask Selection releases, it feels kind of silly to mix a daiquiri, because that’s not what those rums are for.

Ultimately we’ve chosen to stick with the daiquiri because there’s not an obvious alternative; you could match the cocktail to the rum type, but that opens up a whole new can of worms and makes it difficult for ratings between tastings to be meaningful. If rum A got a high mixer rating in a Jungle Bird, and rum B got a low mixer rating in an Old Fashioned, it’s difficult to know how they stack up against each other.

Research

Although we’re rum nerds and likely have some opinions about the kinds of rums we’re tasting before we do any reading, we purposefully put off all the additional research that goes into a review until after the blind test. Again, this lets us keep our blind reviews as unbiased as possible.

After the blind taste test, however, we like to learn as much as possible about the rum. First we’ll make sure we have all the basics: country of origin, input material, fermentation length, distillation method, minimum age, and proof. Then we’ll learn as much as possible about the history of the rum: When was it originally released? Is it a limited release? How does it express the distillery’s style? Besides the bottle itself, some of our favorite resources are Modern Caribbean Rum by Matt Pietrek, Rum: The Manual, and the RumX database. At this point, none of this directly determines any part of the rubric, though some of it feeds into our transparency score.

Next, we do some good old-fashioned lab work. We measure the refractive index of the rum with a refractometer, and then the specific gravity with a digital density meter. The former is just because we’re nerds and like data, and the latter gives a general sense of whether anything was added to a rum (in some rum styles it’s common to add sugar after distillation, and everyone has opinions about this). We include this as contextual information in the review, and the estimated additives may inform the transparency rating.

To establish a transparency rating we look at how easy it is to find information about the rum. For our purposes, if a piece of information is on the bottle or the official website of the producer, this is “transparent”. We look for the following information:

- Input material

- Fermentation time

- Distillation method

- Minimum age

- Proof (this is kind of a freebie because it’s legally required)

- Presence of additives

Generally speaking, if all 6 of the above are available on the bottle or website, the rum would get an 8/10 for transparency. We reserve those last 2 points for transparency that goes above and beyond, but that may not be applicable in every case. For example, sharing the cane varietal, additional fermentation techniques, the specific still it was distilled on, and information about the casks it was aged in may all earn the rum bonus points for transparency. Including information prominently on the label may also earn bonus points.

Presence of additives is a bit of an oddball:

- We don’t penalize a rum without additives for not saying “no additives” on the bottle

- We don’t penalize a rum with additives as long as the bottle clearly says what’s added

- If a rum doesn’t declare any additives, and we find it to have substantial amounts of additives (>15 g/L), the rum gets a 3-point penalty.
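The transparency rules above can be sketched as a small function. This is only an illustration under stated assumptions: the text says 6 of 6 disclosed items earns 8/10, up to 2 bonus points are available, and undeclared additives above 15 g/L cost 3 points. How partial disclosure is scored is not spelled out, so the proportional scaling below, the clamping to the 0 to 10 range, and the name `transparency_score` are all hypothetical.

```python
def transparency_score(
    disclosed_items,
    bonus=0.0,
    declares_additives=False,
    measured_additives_g_per_l=0.0,
):
    """Sketch of the transparency rating on a 0-10 scale.

    disclosed_items: how many of the 6 listed facts appear on the bottle
        or the producer's official website (0-6). A rum with no additives
        is not penalized for omitting "no additives", so the caller can
        count that item as satisfied.
    bonus: up to 2 points for going above and beyond.
    """
    if not 0 <= disclosed_items <= 6:
        raise ValueError("disclosed_items must be between 0 and 6")

    # Assumption: partial disclosure scales proportionally up to 8 points.
    base = 8.0 * disclosed_items / 6

    # Bonus points are capped at the 2 reserved points.
    bonus = max(0.0, min(bonus, 2.0))

    # Penalty only applies to undeclared, substantial additives (>15 g/L).
    undeclared = not declares_additives and measured_additives_g_per_l > 15
    penalty = 3.0 if undeclared else 0.0

    # Assumption: the final score is clamped to the 0-10 range.
    return max(0.0, min(10.0, base + bonus - penalty))


print(transparency_score(6))                                   # 8.0
print(transparency_score(6, measured_additives_g_per_l=20.0))  # 5.0
```

A rum that declares its additives on the bottle would pass `declares_additives=True` and keep its score no matter how much was measured.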

After assessing transparency in a fairly objective way, we do one of the more subjective assessments: presentation. While it’s not the most important thing in the world, we believe the quality and aesthetics of a bottle can improve the subjective experience of the rum. Most reviewers will be looking at the bottle while evaluating the rum, so whether or not the bottle looks nice will implicitly affect their rating. When we do a blind taste test we purposefully do not know what the bottle looks like, so we intentionally and explicitly share our thoughts in this section. Beyond looks, we also consider whether the bottle feels sturdy and whether the closure is secure and easy to get on and off.

As part of our research, we also make sure to record the price, and come up with a value score. “Value” is a tricky concept, and we purposefully leave it out of our overall score for a few reasons:

- Prices for the same rum vary in different parts of the world, and we can only assess value based on the price we paid for a rum

- Prices are non-linear; while most standard release rums fall somewhere between $20 and $50, many special releases easily reach $200, and there are plenty of rums that can now only be found on the secondary market for more than $1k.

- Some rums genuinely compete on price (e.g. Bacardi Superior) and some rums are so rare price is almost irrelevant (a la The Appleton Estate 17-Year-Old Legend, should we ever be so lucky to get our hands on some).

Personal Review

Once we’ve fully assessed all these parts of the rum separately, we attempt to bring it all together in a personal review. We have opinions, and we think it’s important to make it clear a review is coming from a point of view. By this point, often days or weeks after the blind tasting, we’ve had plenty of time to sit with a rum and see how it fits into our lives. Do we have go-to drinks for it? Does it pair well with a particular food? What tasting notes have we discovered that we missed in the blind taste test? Importantly, we’ll actively consider contextual information: is this a historically important rum? Is this rum accessible to newcomers? Does the producer use deceptive marketing tactics?

The result of this process is a long-form written review, as well as a personal rating; because it considers all of the individual factors in context, we give the personal rating the highest single weight in our rubric.

As of today, that’s the long and short of how we review rums. As we said at the top, a lot of different considerations went into coming up with this process, and while this is the way we love to review rum, we’re in no way saying this is the only right way to do so. We feel confident about the choices we made, but there are many we’re open to revisiting.